Editor's note; this was a project that I started several years ago and left unfinished, so there is a large time skip in the middle of the article, and the project has changed considerably. Some parts in the beginning may contradict parts later on. Sorry. If you just want to see the code, it's here.

Building a network device manager in OCaml

I'm building a suite of services for running virtual machines in my home network. I am turning my home PC, my laptop, and a few machines I plan to rent from cloud providers into a cohesive, low cost, uniform system for building, deploying and maintaining software. I will write more about it in the future. For this post, I just wanted to walk through the process of using OCaml to call C functions, with the purpose of writing local services and utilities to run on a Unix system.

This will be more of a "build log", where I've tried to include any dead ends, errors, misconceptions, or other problems I encountered. My intention is to show others how they can dig themselves out if any holes they fall into. I've included most of the error messages I've encountered to help anyone doing a web search for error strings.

I'm also using this as a learning opportunity to get more comfortable with OCaml, so some of the abstractions I make may seem unnecessary; they probably are. But I'm deliberately trying to bump up against the areas of OCaml and its ecosystem that I don't understand very well yet, in the hope that in the future, if I do need to go there, I'll be able to fall back on this experience.

I'll be building two tools;

tapalloc- a privileged service that provisions network devices and an unprivileged client that retrieves file descriptors for those devices over a unix socket.tapautoconf- a service that uses netlink(7) to snoop on DHCP/ICMPv6 traffic coming from a tap device to configure IPv4/IPv6 addresses on it so they can receive traffic, allowing ipvlan-based devices to configure themselves.

The goal of these tools is to enable me to create VMs and containers with their own "real" IP addresses, on-demand, in a way that I find palatable.

Allocating network devices

The tapallocd programs allocate different kinds of TAP devices on behalf of a client process, and pass file descriptor(s) for the device to the client over a unix socket. These file descriptors can then be used by a hypervisor like qemu or firecracker as a VM's network interface. They are intended as an alternative to helper programs such as qemu-bridge-helper, and do not require a setuid-root binary. Ultimately I plan to re-implement the following pattern for acquiring other kinds of system resources:

- Client A connects to unix socket for allocator service B

- Allocator service B checks some policy to decide if A is

allowed access to the resource. It can query the

SO_PEERCREDoption to learn the uid, gid, and pid of the calling process. - If the check is successful, B allocates the resource, opens the resource files, and passes the open file descriptor to A over the unix socket.

- B closes its copy of the file descriptor, so that A retains the sole reference to the resource. Once A closes the file descriptor, explicitly or by exiting, the resource is collected.

This gives me the flexibility to perform permissions checks

of arbitrary complexity, and to really restrict the actions an

unprivileged process is allowed to take. As a comparison to the

capabilities(7)

system in Linux, giving a process the CAP_NET_ADMIN capability required

to provision and configure network interfaces grants permission to do

far more, like add or modify entries in the system's routing table.

On Linux, network devices are created and configured using the netlink(7)

interface. The Linux kernel supports an additional type of address family

for sockets, AF_NETLINK. A process that wants to create a network

interface opens a socket of this type and sends and receives messages over

the file descriptor. I will be interfacing with the libmnl companion

library, which will perform most of the nitty-gritty work of marshalling

and unmarshalling these messages.

Traditionally, when you wanted to call a C function from OCaml, you would

manually write a stub, a small

C library with intermediate functions that convert parameters from their

representation in the OCaml runtime to the required representation for the

system call (such as a char* or a struct stat and convert the return

value in the reverse direction. It is not unlike the process of extending

python with C.

Here

is an example of a stub I wrote to expose the vectored

IO system calls.

There is an OCaml package called ctypes that partially automates the generation of these "stub" files for you. Rather than writing the stub files directly, you write a smaller description of the C functions you would like to call, as an OCaml module, and the stubs are then generated from your description.

Project setup

I use GNU guix to provision my development environment. In the new repository I write the file guix.scm with a package definition, following this example:

(package

(name "tapalloc")

(version "dev")

(source (local-file "." #f #:recursive? #t))

(synopsis "") (description "") (home-page "")

(build-system dune-build-system)

(inputs

(list

ocaml-ctypes

ocaml-fmt

linux-libre-headers

libmnl

gcc-toolchain

pkg-config))

(license isc))

This describes a package built from sources in the current directory

using the dune build

system. The inputs list describes the dependencies needed to build

this package. With such a file in the project's root directory, I can

run the command

$ guix shell -D

which drops me into a new shell with the listed input packages available. With OCaml, most new projects manage their dependencies and packaging with opam. I use guix instead of opam because guix it is agnostic to any one programming language, it is easy to extend, it is easy to modify, patch, or replace dependencies, and it is easier to use it to get reproducible builds; unless I really mess up, someone else should be able to follow the same process I did and produce the exact same binary. Later on, I want to use guix as part of an automated build and test system. That said, the use of guix is still optional, and the README file contains instructions for building the package without guix.

With my dependencies loaded, I create the directory structure I want:

- The

libmnl/directory will contain source for theLibmnlOCaml module, which is a thin wrapper around libmnl. - The

tapalloc/directory will have the source for the executable programstapalloc-macvtapandtapallocd-ipvtap. - The

contrib/directory will contain an example script to run these programs unders6-ipcserver.

My usual process when starting a new project is to start with the smallest meaningful goal at first; a "hello, world" of sorts. This lets me validate the build process without getting bogged down trying to write the whole program from the start.

To start, then, I write a simple program, tapalloc/hello.ml,

whose only purpose is to open a netlink socket using the

mnl_socket_open

function, and exit.

(* hello.ml *)

let () =

match Libmnl.(socket_open NETLINK_ROUTE) with

| exception _ -> Fmt.epr "failure%!"

| sock -> Fmt.epr "success%!"

I can then put build instructions in tapalloc/dune:

(test

(name hello)

(modules hello)

(libraries libmnl fmt))

And start a test loop with the command

dune test -w

This test will re-run itself every time it detects a change in its dependencies. I keep this running in a visible window while I work on the project. In general, when I am working on a project in any language, I always have a visible window that is automatically updated with test or build results, so I can get constant feedback.

Unsurprisingly, the test fails with the error:

Entering directory '/src/dev.arroyo.cc/tapalloc'

File "tapalloc/dune", line 4, characters 12-18:

4 | (libraries libmnl fmt))

^^^^^^

Error: Library "libmnl" not found.

-> required by _build/default/tapalloc/hello.exe

-> required by alias tapalloc/runtest in tapalloc/dune:2

Had errors, waiting for filesystem changes...

As it says, the libmnl library does not exist. We can write libmnl/dune:

(library

(name libmnl))

Now the error changes to

Error: Unbound value socket_open

It's time to stop beating around the bush and define this library. With ctypes, there are 2 ways to call functions in a C library:

-

Loading the C library at runtime using

dlopen()and jumping into it. The function must first be described with theforeignfunction before it can be used. Assuming your description is accurate, the OCaml compiler will prevent you from calling the function with bad input. However, if your description is incorrect, you may have a run-time error, or worse. It is also not possible to use this method in a statically-linked binary. -

Using the Cstubs sub-package which will generate a

.cfile that you must compile and link into your binary, along with the external library. The benefit is that if your description is incorrect, the C compiler is likely to throw an error, because the generated.cfile will have an error. This method is also amenable to static linking. The drawback is increased complexity in your build process.

I prefer to statically link my binaries when feasible, and I want to catch

as many errors as I can at build time, so I am using the second option of

generating .c files. Luckily, the dune build tool makes this quite easy.

With the dune 3.0 and above, it even has a dedicated (ctypes ...)

stanza

that will build the stub files for you. It requires you to define two modules:

- A module named

Functionsdescribing functions in the external C library - A module named

Typesdescribing constants

I put these modules in a single file, libmnl/c_stub.ml:

open Ctypes

module Functions (F : Ctypes.FOREIGN) = struct

open F

type mnl_socket

let mnl_socket : mnl_socket structure typ = structure "mnl_socket"

let mnl_socket_open = int @-> returning (ptr mnl_socket)

end

module Types (F : Ctypes.TYPE) = struct

open F

let netlink_route = constant "NETLINK_ROUTE" int

let netlink_generic = constant "NETLINK_GENERIC" int

end

The libmnl library defines the mnl_socket struct in its header like so:

struct mnl_socket;

That is, external users of the library do not have access to the fields of the struct; it is a so-called "opaque struct". Such a struct maps well to OCaml as an abstract type (a type with a hidden implementation):

type mnl_socket

To describe a C function for the ctypes module, we make use of the

@-> operator and some helper functions. "@->" is meant to look

like the -> syntax that is used in ordinary OCaml function signatures.

If you squint, the description

let mnl_socket_open = int @-> returning (ptr mnl_socket)

Looks almost like an OCaml function signature, such as

val mnl_socket_open : int -> mnl_socket ptr

It is slightly different, though; in the ctypes description, the words

int, returning, ptr and mnl_socket are values rather than

types, as is the case in the OCaml function signature. The line

let mnl_socket : mnl_socket structure typ = structure "mnl_socket"

Unites the value mnl_socket with the type mnl_socket.

The two modules have the names Functions and Types. The rules

implementing dune's ctypes stanza are hard-coded to look for modules

with these names.

The Types module is currently empty, but it won't be for long; I will

populate it with constants as needed.

In libmnl/dune I add the (ctypes ...) stanza:

(library

(name libmnl)

(ctypes

(external_library_name libmnl)

(headers (include "libmnl/libmnl.h" "linux/netlink.h"))

(type_description

(instance Const) ;; symbols accessed under C.Const

(functor c_stub)) ;; look for c_stub.Types

(function_description

(instance Libmnl) ;; symbols accessed under C.Libmnl

(functor c_stub)) ;; look for c_stub.Functions

(generated_entry_point C))) ;; nest modules under module name "C"

At this point I got a nasty surprise in my test loop:

********** NEW BUILD (libmnl/c_stub.ml changed) **********

File "libmnl/_unknown_", line 1, characters 0-0:

In file included from /gnu/store/63qdzf5h8s815z4gqydqfm5wsc87qs8n-profile/lib/ocaml/site-lib/ctypes/ctypes_cstubs_internals.h:13,

from /src/dev.arroyo.cc/tapalloc/_build/default/libmnl/libmnl__c_cout_generated_types.c:4:

/gnu/store/63qdzf5h8s815z4gqydqfm5wsc87qs8n-profile/lib/ocaml/site-lib/ctypes/ctypes_primitives.h:16:10: fatal error: ocaml_integers.h: No such file or directory

16 | #include "ocaml_integers.h"

| ^~~~~~~~~~~~~~~~~~

compilation terminated.

Had errors, waiting for filesystem changes...

What is "ocaml_integers.h" and what did I ever do to it? Since the header name is fairly unique, I can search my system for it:

$ find /gnu/store -name 'ocaml_integers.h'

/gnu/store/g48m9g91754zssif06mn66gmjswq619i-ocaml-integers-0.7.0/lib/ocaml/site-lib/integers/ocaml_integers.h

So it is part of the ocaml-integers package. It is present in my dev shell:

$ ocamlfind query integers

/gnu/store/63qdzf5h8s815z4gqydqfm5wsc87qs8n-profile/lib/ocaml/site-lib/integers

So what's wrong? Looking at the dune build log, at $project_root/_build/log, here is the

command that dune attempts to run:

gcc \

-I/gnu/store/dj7kw3mqasw0rxdbm1gkajgsznhw8b4h-libmnl-1.0.4/include \

-I/gnu/store/sjkj3bi7nx8bd02i8z0d43b85xm76rj5-ocaml-4.14.0/lib/ocaml \

-I/gnu/store/63qdzf5h8s815z4gqydqfm5wsc87qs8n-profile/lib/ocaml/site-lib/ctypes \

-o /src/dev.arroyo.cc/tapalloc/_build/default/libmnl/libmnl__c_cout_generated_types.exe \

libmnl/libmnl__c_cout_generated_types.c

The problem is that the .../lib/ocaml/site-lib/integers directory is not

added to gcc's header search path. This does not happen when I am using

opam instead of guix. After a bit of trial and error I identified the

problem; the ctypes package, during its installation process, copies

this header file from the integers package into its

own installation directory, and the guix package was overriding

that behavior. I sent a patch

to Guix that removes this override, and for the time being, did a local

override like so:

(use-module

(ice-9 match)

(guix transformations))

(define ocaml-ctypes-fixbuild

(package

(inherit ocaml-ctypes)

(arguments

(match (package-arguments ocaml-ctypes)

((#:make-flags flags . rest) rest)))))

((package-input-rewriting `((,ocaml-ctypes . ,ocaml-ctypes-fixbuild)))

(package

(name "tapalloc")

(version "dev")

(source (local-file "." #f #:recursive? #t))

(synopsis "Allocates ephemeral TAP devices on demand.")

(description "")

(home-page "")

(build-system dune-build-system)

;; gcc is needed for stdarg.h

(inputs (list ocaml-ctypes ocaml-fmt linux-libre-headers libmnl gcc-toolchain))

(native-inputs (list pkg-config))

(license expat)))

With that digression out of the way, we get a new error message:

File "libmnl/libmnl__c_generated_types.ml", line 25, characters 24-31:

25 | let enum (type a) name ?typedef ?unexpected (alist : (a * int64) list) =

^^^^^^^

Error (warning 27 [unused-var-strict]): unused variable typedef.

The dune manual warns us about these errors in its example:

; ctypes backward compatibility shims warn sometimes; suppress them

(flags (:standard -w -9-27))

I hope that in the future, dune can automatically make these warnings non-fatal, or that ctypes can stop triggering them. Because the current solution encourages developers to copy build directives they don't understand, and it is unlikely they will know when suppression is no longer necessary. With the warnings suppressed, we finally get a real error:

File "tapalloc/hello.ml", line 2, characters 16-27:

2 | match Libmnl.(socket_open NETLINK_ROUTE) with

^^^^^^^^^^^

Error: Unbound value socket_open

The build of the c stub files completes, and we're now back to calling functions that do not exist yet. Just to confirm things are working, I change the test to call the generated C function directly:

(* tapalloc/hello.ml *)

let () =

match Libmnl.C.Libmnl.mnl_socket_open 0 with

| _ -> Fmt.pr "success!"

The next error is

ld: libmnl/liblibmnl_stubs.a(libmnl__c_cout_generated_functions__c_stub__libmnl.o): in function `libmnl_stubs_1_mnl_socket_open':

_build/default/libmnl/libmnl__c_cout_generated_functions__c_stub__libmnl.c:6: undefined reference to `mnl_socket_open'

collect2: error: ld returned 1 exit status

File "caml_startup", line 1:

Error: Error during linking (exit code 1)

The error complains about an undefined reference to mnl_socket_open, a

C function in libmnl. This shouldn't happen, as the library (.cmxa)

file generated for the libmnl module should contain the necessary

directives for the C linker:

$ ocamlobjinfo _build/default/libmnl/libmnl.cmxa | head

File _build/default/libmnl/libmnl.cmxa

Extra C object files: -lmnl -llibmnl_stubs -L/gnu/store/dj7kw3mqasw0rxdbm1gkajgsznhw8b4h-libmnl-1.0.4/lib -lmnl

And the libmnl.so library itself does indeed have a public symbol

mnl_socket_open:

$ readelf -sW $(guix build libmnl)/lib/libmnl.so| grep mnl_socket_open

33: 0000000000002340 93 FUNC GLOBAL DEFAULT 13 mnl_socket_open@@LIBMNL_1.0

73: 00000000000023a0 85 FUNC GLOBAL DEFAULT 13 mnl_socket_open2@@LIBMNL_1.2

So what gives?

I find the best thing to do in times like these is to find an example

build that does work, and iteratively change that example, one piece

at a time, until it looks like the build that does not work. At some

point, the build will break, and that will bring you closer to the root

cause. So, starting with the example in dune's documentation, I changed

from building a libmnl library, to building a libmnl executable

;; libmnl/dune

(executable

(name libmnl)

(libraries fmt)

(flags (:standard -w -9-27 -verbose))

(ctypes

(external_library_name libmnl)

(headers (include "libmnl/libmnl.h"))

(type_description

(instance Const) ;; symbols accessed under C.Const

(functor c_stub)) ;; look for c_stub.Types

(function_description

(instance Libmnl) ;; symbols accessed under C.Libmnl

(functor c_stub)) ;; look for c_stub.Functions

(generated_entry_point C))) ;; nest modules under module name "C"

and embedding tapalloc/hello.ml into this executable:

(* libmnl/libmnl.ml *)

open C

type bus =

| NETLINK_ROUTE

| NETLINK_GENERIC

let bus_to_int = function

| NETLINK_ROUTE -> Const.netlink_route

| NETLINK_GENERIC -> Const.netlink_generic

let socket_open bus = Libmnl.mnl_socket_open (bus_to_int bus)

let () =

match socket_open NETLINK_ROUTE with

| _ -> Fmt.pr "opened a netlink socket!\n"

This works!

$ dune exec libmnl/libmnl.exe

opened a netlink socket!

So it can build the executable when the c stubs are defined in the

same stanza in dune, but triggers a linker error when the executable

and library are separate. What's going on? Adding (flags (:standard -verbose)) to tapalloc/dune tells the OCaml compiler to print the

external commands it runs to link the program. Here's the command,

with a bit of formatting added and unimportant flags omitted:

1 gcc -o 'tapalloc/hello.exe' \

2 '-L$GUIX_ENVIRONMENT/lib/ocaml' \

3 '-L$GUIX_ENVIRONMENT/lib/ocaml/site-lib/integers' \

4 '-L$GUIX_ENVIRONMENT/lib/ocaml/site-lib/ctypes' \

5 '-Llibmnl' \

6 '/tmp/build_1e9f8a_dune/camlstartup085117.o' \

7 '$GUIX_ENVIRONMENT/lib/ocaml/std_exit.o' \

8 'tapalloc/.hello.eobjs/native/dune__exe__Hello.o' \

9 'libmnl/libmnl.a' \

10 '$GUIX_ENVIRONMENT/lib/ocaml/site-lib/ctypes/cstubs.a' \

11 '$GUIX_ENVIRONMENT/lib/ocaml/str.a' \

12 '$GUIX_ENVIRONMENT/lib/ocaml/site-lib/ctypes/ctypes.a' \

13 '$GUIX_ENVIRONMENT/lib/ocaml/site-lib/integers/integers.a' \

14 '$GUIX_ENVIRONMENT/lib/ocaml/site-lib/bigarray-compat/bigarray_compat.a' \

15 '$GUIX_ENVIRONMENT/lib/ocaml/site-lib/fmt/fmt.a' \

16 '$GUIX_ENVIRONMENT/lib/ocaml/stdlib.a' \

17 '-llibmnl_stubs' \

18 '-L$GUIX_ENVIRONMENT/lib' \

19 '-lmnl' \

20 '-Wl,--no-as-needed' \

21 '-lcamlstr' \

22 '-lctypes_stubs' \

23 '-lintegers_stubs' \

24 '-Wl,--no-as-needed' \

25 '-lintegers_stubs' \

26 '$GUIX_ENVIRONMENT/lib/ocaml/libasmrun.a' \

27 -lm \

28 -ldl

Do you see the problem? If you don't immediately see the problem,

don't feel bad; it was only after I had dug into this problem

for the better part of a day, almost given up, and taken a break

(and a drink) before I realized what was wrong. When I build the

libmnl module as a library instead of an executable, dune builds the

archive _build/default/libmnl/libmnl.a. Then, when it comes time to

build hello.exe, the archive's parent directory is added to gcc's

library search path with the -Llibmnl directive (line 5), before

the directive that adds the parent directory of the "real" library,

-L$GUIX_ENVIRONMENT/lib/libmnl.so (line 18). As a result, the -lmnl

directive tells gcc to link _build/default/libmnl/libmnl.a, not

$GUIX_ENVIRONMENT/lib/libmnl.so. The local library, which shadows

but depends on the external one, obviously doesn't have a symbol for

mnl_socket_open.

Changing the library name from libmnl to

mnl

does the trick. We've reached the first milestone, and our major build

troubles should be behind us.

The programs in the tapalloc project are pretty simple; the client reads a

file descriptor from a unix socket. The servers provision a single interface,

and send an open file descriptor referencing it over said unix socket. So, in

addition to the libmnl library, I will need functions to read and write

file descriptors over a unix socket.

Error handling

In C, like in OCaml, functions can only return a single value. This

raises the question of how to return an error from a C function. In OCaml,

you can raise an exception, or use a sum type like result:

# type ('ok, 'err) result =

Ok of 'ok

| Error of 'err

# let safe_incr num =

if x = Int.max_int then

Error "overflow"

else

Ok (x + 1)

;;

val safe_incr : int -> (int, string) result

While you could use a C union to mimic a result type, it would

require unsafe practices or the declaration of a union type for each

unique return type, and may impose additional memory management on the

caller of the function. Instead, C functions typically return a single

value like an integer or a pointer, and use a return value outside of

the function's range to signify an error. The caller can then check the

(sorta) global variable errno to learn more about the nature of the

error. For instance here is the signature of the read(2) function:

ssize_t read(int fd, void *buf, size_t count);

If an I/O error occurs, read should return -1 and set errno to

the value EIO. -1 is outside of the range of read(2) because it is

impossible to read a negative number of bytes. Functions that return a

pointer typically use NULL to signify an error. Functions that return

a floating point number may return HUGE_VAL or NaN (see the glibc

manual).

By default, the code generated by ctypes does not

check for errors, and just returns the return value of

the C function call. You can specify an errno_policy

of return_errno to signal to the Cstubs code generator that functions

should sample the value of errno after calling a C function and return

it. So, to add error handling for the mnl_socket_open function, I can

change its wrapper to this:

(* mnl/mnl.ml *)

let socket_open bus =

match Libmnl.mnl_socket_open bus with

| (p, errno) when Ctypes.is_null p -> Error errno

| (sock, _) -> Ok sock

To make the code a little nicer, I can change the signature of mnl_socket_open to:

- foreign "mnl_socket_open" (int @-> returning (ptr mnl_socket))

+ foreign "mnl_socket_open" (int @-> returning (ptr_opt mnl_socket))

This way, NULL is returned as None, and a non-NULL becomes Some p. Then

my wrapper becomes:

let socket_open bus =

match Libmnl.mnl_socket_open bus with

| (None, errno) -> Error errno

| (Some sock, _) -> Ok sock

There is another roadblock for us; ctypes provides the errno_policy

parameter for its code generator, but dune does not expose it in its

(ctypes ...) stanza. I wrote a

patch

and added it to my local dune by modifying guix.scm like

so.

Now I can augment the dune file like so:

(function_description

+ (errno_policy return_errno)

(instance Libmnl)

(functor c_stub))

Unfortunately, I'm met with a new error 🙃

ld: mnl/libmnl__c_cout_generated_functions__c_stub__libmnl.o: warning: relocation \

against `Caml_state' in read-only section `.text'

ld: mnl/libmnl__c_cout_generated_functions__c_stub__libmnl.o: relocation R_X86_64_PC32 \

against undefined symbol `Caml_state' can not be used when making a shared object; \

recompile with -fPIC

ld: final link failed: bad value

collect2: error: ld returned 1 exit status

Here is a partial diff of the .c file that builds the .o file referenced in

the error, with ignore_errno vs return_errno:

value libmnl_stubs_1_mnl_socket_open(value x1)

{

int x2 = Long_val(x1);

+ errno = 0;

struct mnl_socket* x5 = mnl_socket_open(x2);

- return CTYPES_FROM_PTR(x5);

+ value x6 = CTYPES_FROM_PTR(x5);

+ return ctypes_pair_with_errno(x6);

}

With the return_errno policy, the C function

now has to call ctypes_pair_with_errno to build

a (value, errno) tuple. This function, in turn, calls

caml_alloc_tuple,

which calls

caml_alloc,

which calls Alloc_small, which calls

Alloc_small_with_profinfo

which references the Caml_state symbol, which appears to be a

block of memory

holding all of the state for the current runtime domain. I can see

that the object file contains the following relocation records:

$ objdump -r _build/default/mnl/libmnl__c_cout_generated_functions__c_stub__libmnl.o

RELOCATION RECORDS FOR [.text]:

OFFSET TYPE VALUE

0000000000000015 R_X86_64_PC32 Caml_state-0x0000000000000004

0000000000000027 R_X86_64_PC32 Caml_state-0x0000000000000004

0000000000000039 R_X86_64_PC32 Caml_state-0x0000000000000004

0000000000000078 R_X86_64_PC32 Caml_state-0x0000000000000004

000000000000008d R_X86_64_PC32 Caml_state-0x0000000000000004

and these are not present when using the ignore_errno policy.

The error message suggests recompiling with the -fPIC option.

I can add it to the dune files:

(library

(name mnl)

(ocamlopt_flags (:standard -fPIC)))

However, this doesn't seem to make any difference, I still get the same

error. To investigate further, I can re-run the build with strace to snoop

on the ld command that gets run:

$ strace --trace=execve \

--string-limit=1000 \

--follow-forks \

--successful-only \

dune build

First, I can see that the specific gcc command that fails is this one:

gcc -shared -g -o mnl/dllmnl_stubs.so mnl/libmnl__c_cout_generated_functions__c_stub__libmnl.o

And if I look further in the strace output, I can confirm that, indeed,

mnl/libmnl__c_cout_generated_functions__c_stub__libmnl.o

was not compiled

with -fPIC. If I rebuild this object file by hand with -fPIC, the failing

command now works:

$ gcc -I$GUIX_ENVIRONMENT/{include,lib/ocaml,lib/ocaml/site-lib/bigarray-compat,lib/ocaml/site-lib/ctypes,lib/ocaml/site-lib/integers,ocaml/site-lib/stdlib-shims} -fPIC -g -o mnl/libmnl__c_cout_generated_functions__c_stub__libmnl.o -c mnl/libmnl__c_cout_generated_functions__c_stub__libmnl.c

$ gcc -shared -g -o mnl/dllmnl_stubs.so mnl/libmnl__c_cout_generated_functions__c_stub__libmnl.o

$ file mnl/dllmnl_stubs.so

mnl/dllmnl_stubs.so: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, with debug_info, not stripped

And you can see the relocation type in the object file changed from

R_X86_64_PC32 to R_X86_64_REX_GOTPCRELX:

$ objdump -r mnl/libmnl__c_cout_generated_functions__c_stub__libmnl.o

RELOCATION RECORDS FOR [.text]:

OFFSET TYPE VALUE

0000000000000015 R_X86_64_REX_GOTPCRELX Caml_state-0x0000000000000004

000000000000002a R_X86_64_REX_GOTPCRELX Caml_state-0x0000000000000004

000000000000003f R_X86_64_REX_GOTPCRELX Caml_state-0x0000000000000004

...

So why was this only a problem with the return_errno policy? Honestly,

I am not sure; it seems like dumb luck, because with the ignore_errno

policy, the -fPIC flag is not used, but there are no relocations for which

gcc decides to use the R_X86_64_PC32 relocation type:

$ objdump -r mnl/libmnl__c_cout_generated_functions__c_stub__libmnl.o

RELOCATION RECORDS FOR [.text]:

OFFSET TYPE VALUE

000000000000001c R_X86_64_PLT32 mnl_socket_open-0x0000000000000004

000000000000002c R_X86_64_PLT32 caml_copy_nativeint-0x0000000000000004

I came up with a simple reproduction and submitted a bug report for dune. In the meantime, I can manually specify the flags for building the object files:

;; mnl/dune

(library

(name mnl)

(libraries integers)

(flags (:standard -w -9-27))

(ctypes

(external_library_name libmnl)

(headers (include "libmnl/libmnl.h"))

;; https://github.com/ocaml/dune/issues/5809

(build_flags_resolver

(vendored

(c_flags (:standard -fPIC -I%{env:GUIX_ENVIRONMENT=/usr}/include))

(c_library_flags (:standard -lmnl -L%{env:GUIX_ENVIRONMENT=/usr}/lib))))

I don't like having to put guix-related environment variables in the dune

file, as I want to keep the build files agnostic of the package manager.

But it will have to do for now. With this, dune build succeeds! I've tried

to submit fixes to the speed bumps I encountered, so eventually no one else

will have to hit them. As of 3 Jun 2022:

- Guix patch 55715, fixing the missing

ocaml_integers.herror has been merged upstream. - A fix for the

-fPICissue #5809 by @mbacarella has been merged, and is available in the 3.3.0 release. - My PR #5827 adding

errno_policysupport to dune's(ctypes)stanza, has been merged and will be available in the 3.4.0 release.

With build stuff out of the way, let's write the damn program ☺.

Exposing a subset of libmnl

Since I don't plan on making my libmnl bindings a standalone library, but

rather an implementation detail of the tapalloc programs, I will only expose

those functions which I need to implement my services.

I will also expose a few identifiers from

netlink.h

from the Mnl module, even though they are not technically part of libmnl.

For that, I need to add "linux/netlink.h" and "linux/if_link.h" to the

includes set in mnl/dune.

First, it's nice to define some helper functions to reduce some of the repetition for error handling:

(* mnl/mnl.ml *)

open struct

type errno = Signed.SInt.t

let string_of_errno n

let i = Signed.SInt.to_int n in

Unix.(error_message (EUNKNOWNERR i))

let check_neg1 : (int * errno) -> ('a, string) result =

function

| -1, e -> Error (string_of_errno e)

| v, _ -> Ok v

let check_none : ('a option * errno) -> ('a, string) result =

function

| None, e -> Error (string_of_errno e)

| v, _ -> Ok v

end

The open struct ... end idiom is a scoping trick; the symbols defined

within the anonymous block are available in the rest of the file, as if

they were defined at the top level, but will not be present in the public

interface of the module, and so cannot be used from other modules, and

will not show up in automatically-generated documentation. It is

a way for me to hide helper functions without maintaining an interface

file. With these

helpers in place, the socket_open and socket_close functions now become:

let socket_open bus = Libmnl.mnl_socket_open (bus_to_int bus) |> check_none

let socket_close sock = Libmnl.mnl_socket_close sock |> check_neg1

Now, regarding the creation of interfaces, the netlink API is sufficiently complex enough that, instead of reading it from top to bottom, I want to scope my search with some examples. Recently, the ever-useful strace has learned how to parse netlink messages, so I can simply run

doas strace ip link add ipv0 link wlan0 type ipvtap

And get back a readable sequence of messages that I can mimic in my program.

The ip tool sends the following netlink message to create the link, given the

command above:

sendmsg(3,

{

msg_name={

sa_family=AF_NETLINK,

nl_pid=0,

nl_groups=00000000

},

msg_namelen=12,

msg_iov=[{

iov_base=[

{

nlmsg_len=68,

nlmsg_type=RTM_NEWLINK,

nlmsg_flags=NLM_F_REQUEST|NLM_F_ACK|NLM_F_EXCL|NLM_F_CREATE,

nlmsg_seq=1654360314,

nlmsg_pid=0

},

{

ifi_family=AF_UNSPEC,

ifi_type=ARPHRD_NETROM,

ifi_index=0,

ifi_flags=0,

ifi_change=0

},

[

[{nla_len=8, nla_type=IFLA_LINK}, 4],

[{nla_len=9, nla_type=IFLA_IFNAME}, "ipv0"],

[{nla_len=16, nla_type=IFLA_LINKINFO}, [

{nla_len=10, nla_type=IFLA_INFO_KIND}, "ipvtap"...]]

]

],

iov_len=68

}],

msg_iovlen=1,

msg_controllen=0,

msg_flags=0

},

0)

The message consists of a struct nlmsghdr, indicating an RTM_NEWLINK

request, followed by a struct ifinfomsg which is blank (both AF_UNSPEC

and ARPHRD_NETROM are defined as 0), followed by 3 struct nlattr items

describing the type of interface. The netlink(7) protocol, like other

network protocols, is layered, and message payloads can carry messages in

another sub-protocol. In this case, the sub-protocol is rtnetlink(7). We need

to surface some of the structs, enums, and constants found in the

<linux/rtnetlink.h> header, after which we can compose a message with

the right combination of flags and attributes seen in the trace.

Laying out messages in memory

Netlink is a datagram-based protocol. A message is composed of a standard header:

The standard header is then followed by a payload, which can have varying sizes and fields. Several of these messages can be laid out next to each other in a single datagram. You could model a protocol like this in OCaml like so:

type 'a nlmsg = {

nlmsg_len : int32;

nlmsg_flag : int;

nlmsg_type : int;

nlmsg_seq : int32;

nlmsg_pid : int32;

payload : 'a;

}

However, while superficially it looks like a netlink message, the

memory layout is completely different; the int32 fields are actually

pointers to blocks containing an integer value, just as payload

will be for any non-int payload. As I was browsing the iproute2 source code,

I came across this pattern which was used a lot:

struct {

struct nlmsghdr n;

struct ifinfomsg i;

char buf[1024];

} req = {

.n.nlmsg_len = NLMSG_LENGTH(sizeof(struct ifinfomsg)),

.n.nlmsg_flags = NLM_F_REQUEST | NLM_F_ACK,

.n.nlmsg_type = RTM_NEWLINK,

.i.ifi_family = AF_UNSPEC,

};

While here it's mostly a syntactical construct, what struck me was the textual separation of the layout information and the actual message data. It made me think, "can I separate the layout information from the actual data?" As it turns out, you can, using Generalized algebraic data types (GADT).

While netlink is a datagram-oriented protocol, a single datagram can contain multiple messages of varying types. While netlink sockets, like other sockets, support the vectored sendmsg(2) and recvmsg(2) system calls that would allow me to spread parts of a message over multiple memory buffers, the libmnl library lays out messages in a single buffer.

The nlmsghdr structure in the netlink(7) API is, as the hdr suffix implies,

a header. That is, it shows up at the beginning of some piece of memory

that is potentially longer than just the header itself, and the header implies

some layout on the memory that follows it. The mnl library provides a

function, mnl_put_extra_header that, in C, takes a size_t and returns a

void pointer which you cast into the appropriate structure type.

The rtnl-link-set.c shows how the mnl_*_put functions place sections of

a message adjacent to each other by allocating from a shared buffer.

4 char buf[MNL_SOCKET_BUFFER_SIZE];

...

26 nlh = mnl_nlmsg_put_header(buf);

...

30 ifm = mnl_nlmsg_put_extra_header(nlh, sizeof(*ifm));

...

35 mnl_attr_put_str(nlh, IFLA_IFNAME, argv[1]);

I adapted this function to take a GADT, which determined the return type, instead:

type _ extra_header =

| Ifinfomsg : Ifinfomsg.t ptr extra_header

| Ifaddrmsg : Ifaddrmsg.t ptr extra_header

val mnl_nlmsg_put_extra_header : t. Nlmsghdr.t ptr -> t extra_header -> t

I mirrored this in my adaptation of the example:

...

let nlh = mnl_nlmsg_put_header buf in

let ifi = mnl_nlmsg_put_header nlh Ifinfomsg in

mnl_attr_put_str nlh IFLA_IFNAME Sys.argv.(1);

...

The mnl library takes care of updating the nlmsg_len field when you add

an extra header.

Unexpected (non-)constants

While building this example, I hit another error:

% dune test

File "mnl/_unknown_", line 1, characters 0-0:

libmnl__c_cout_generated_types.c: In function ‘main’:

libmnl__c_cout_generated_types.c:84:13: error: enumerator value for ‘check_MNL_SOCKET_BUFFER_SIZE_const’ is not an integer constant

84 | enum { check_MNL_SOCKET_BUFFER_SIZE_const = (int)MNL_SOCKET_BUFFER_SIZE };

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

(exit status 1)

Let's see what that constant is:

$ grep -h -r MNL_SOCKET_BUFFER_SIZE $(guix build libmnl)

#define MNL_SOCKET_BUFFER_SIZE (sysconf(_SC_PAGESIZE) < 8192L ? sysconf(_SC_PAGESIZE) : 8192L)

Reading

_build/default/mnl/libmnl__c_cout_generated_types.c

the code generates an expression for each constant, like this:

enum { check_RTM_SETLINK_const = (int)RTM_SETLINK };

but the expression

sysconf(_SC_PAGESIZE) < 8192L ? sysconf(_SC_PAGESIZE) : 8192L

is not a constant; sysconf(3) is a function. I tried many ways to fix this, but ended up re-implementing the expression in OCaml, and caching its result at program startup:

let mnl_socket_buffer_size =

match F.sysconf T.sc_pagesize with

| n, _ when n > 8192 -> n

| _ -> 8192

Refactoring

At this point I have learned enough and used the interface I've defined enough that I want to stop forward progress, go back and refactor things before they get out of hand. Here's a summary of the changes, in broad strokes:

-

I changed the

Mnlmodule name toNetlink, and put the library name prefixes back in the function names. ThusMnl.(socket_open NETLINK_ROUTE)became

Netlink.(mnl_socket_open NETLINK_ROUTE)or rather, the intended:

open Netlink mnl_socket_open NETLINK_ROUTEI did this because the module ended up including identifiers from multiple header files, not just libmnl.h, and since, in C-fashion, those identifiers' names had prefixes hinting where they came from, the module could be opened without the reader getting confused about where some name came from.

-

I made the API more OCaml-friendly; using variants instead of integers for enums, using

intfor all integers where 63 bits, and so on. Sometimes this mean re-implementing some of the things the mnl library was doing.

I also added another module, Sockfd, exposing the ability to

pass file descriptors over a unix socket. Unlike the libmnl bindings,

I wrote this one as a conventional C binding, with a hand-written

C stub.

Creating an interface

Lets try to create an interface. One of the first things I noticed on the libmnl docs was the text

You can access several example files under examples/ in the libmnl source code tree.

To the examples! I can download the libmnl source with guix like so:

$ guix build -S libmnl

/gnu/store/ihwm7imzz5cpwwpxlvnq4a59f2vs29xy-libmnl-1.0.5.tar.bz2

$ tar xf $(guix build -S libmnl)

$ cd libmnl-1.0.5

The file example/rtnl/rtnl-link-set.c contains an example of setting a link up or down. It shouldn't be too much of a stretch to replicate this example, and then iteratively change it until it does what I actually want.

I need to craft an RTM_NEWLINK message, described in rtnetlink(7). It should have the following attributes:

IFLA_LINK=(index of parent interface)

IFLA_IFNAME="tap0"

IFLA_LINKINFO=[

IFLA_INFO_KIND="ipvtap"

IFLA_INFO_DATA=[

IFLA_IPVLAN_MODE=IPVLAN_MODE_L2

IFLA_IPVLAN_FLAGS=0

]

]

After a bunch of hacking, trial-and-error, I got to the point where I had

a test setup using the dummy device driver like this:

An interesting property is that, although the dummy device driver

is designed to drop all packets, the design of the ipvlan and

macvlan drivers is such that communication between child interfaces

is possible even when the parent interface is a dummy device! This has

interesting potential for local-only networks, potentially with enormous

(64k) MTUs, which I could explore in the future, you know, for !!fun!!

The test was pretty fun to write, as it involved lots of forking and exec-ing back into itself in order to set up the ephemeral namespaces using unshare(1). It requires user namespaces to be supported on the system. I think in the future I will setup a User-mode Linux integration test so it can run against a specific kernel without requiring the host machine to support user namespaces (some admins disable this feature out of caution).

I wrote a bunch of pretty-printing functions so I could see the messages I was creating. One funny error I ran into looked like this:

->{nlmsg_len: 44 nlmsg_type: RTM_GETLINK ...} IFLA_IFNAME=dummy0

sent RTM_GETLINK message for dummy0

<-{nlmsg_len: 1452 nlmsg_type: RTM_NEWLINK ...}

{ifi_family: AF_UNSPEC ifi_type: ARPHRD_ETHER ifi_flags: 0x100c3 ifi_change: 0x0000 ifi_index: 2}

IFLA_IFNAME=dummy0 ... IFLA_INFO_KIND=dummy

<- {nlmsg_len: 36 nlmsg_type: NLMSG_ERROR ...}{error: 0 msg: {nlmsg_len: 44 nlmsg_type: RTM_GETLINK ...}

parent device "dummy0" has index 44

->{nlmsg_len: 92 nlmsg_type: RTM_NEWLINK nlmsg_flags: NLM_F_REQUEST|NLM_F_CREATE|NLM_F_EXCL...}

IFLA_LINK=44 IFLA_IFNAME=ipvtap19962 IFLA_INFO_KIND=ipvtap

<-{nlmsg_len: 36 nlmsg_type: NLMSG_ERROR ...}{error: -19 (No such device) ...}

req#1163551169 failed: No such deviceThis happened because I re-used the buffer that held the response for my

RTM_GETLINK request to receive the acknowledgement (an NLMSG_ERROR message

with error=0). The offset of the ifi_index field in the ifinfomsg

struct just happens to line up with the msg.nlmsg_len field in the

nlmsgerror struct. It was a silly error, and easily fixed by making a

copy. Eventually I will change the program so that it doesn't read into

sections of a buffer that are still in use.

After a bit more trial and error, my test was successfully creating an ipvtap device! 🎉

$ nsenter --target $(pgrep -f tapallocd_ipvtap) --net ip link

1: lo: mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: dummy0: mtu 1500 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 1a:4b:25:01:45:dd brd ff:ff:ff:ff:ff:ff

3: ipvtap21559@dummy0: mtu 1500 qdisc pfifo_fast state UNKNOWN mode DEFAULT group default qlen 500

link/ether 1a:4b:25:01:45:dd brd ff:ff:ff:ff:ff:ff But we're not done yet. We have to find the character device and yield file descriptors for it...

Getting the file descriptors

Typically, when you create a macvtap or ipvtap device, udev will

create files under /sys/class/net/$name and a character device at

/dev/tap$index. Depending on your configuration, it will probably be

owned by root. I installed the following udev rule:

SUBSYSTEMS=="ipvtap", OWNER="tapallocd", MODE="0600"

I could make the rule a little more targeted, perhaps matching some

prefix of the interface name, or the parent device. This cooperation

from udev also makes the testing story a little more complicated, as

an automated test will now depend on a device manager. There is also

now a race between the device node being created by udev and tapallocd

attempting to open it; tapallocd will have to wait for some notification

that /dev/tap* has been created. Another challenge is that the device

manager needs to be running in the same network namespace as tapallocd;

you cannot rely on the default device manager.

I want this daemon to "just work" and be able to operate mostly on its own, in its own network namespace. So my approach is to listen to a NETLINK_KOBJECT_UEVENT socket for the device creation event to learn its major+minor device numbers and call mknod(2) within tapallocd to create it. This would also allow us to create the character device file somewhere else, so we can unlink the device without having write permissions to /dev.

This sounds like a lot of work, adding an entirely new protocol to the program. I had trouble finding documentation about the protocol format, so I decided to go ahead and just see what it looked like myself. I made a simple loop that ran in a background thread during the test and just dumped everything it read from a NETLINK_KOBJECT_UEVENT socket to stderr:

let hexdump : int -> buffer Fmt.t =

fun len dst buf ->

(Fmt.hex ()) dst (len, CArray.get buf)

let dump_nl =

let nl = mnl_socket_open NETLINK_KOBJECT_UEVENT in

let* _ = mnl_socket_bind nl NETLINK_KOBJECT_UEVENT 0 1 in

let buf = create_buffer 32768 in

let rec loop () =

match mnl_socket_recvfrom nl buf with

| Error e -> Error (Unix.error_message e)

| Ok len ->

Fmt.epr "got kobject msg@."

Fmt.epr "%a@." (hexdump len) buf;

loop ()

in

loop ()

Running this, the add events were printed in my test's output:

got kobject msg

00: 6164 6440 2f64 6576 6963 6573 2f76 6972 add@/devices/vir

10: 7475 616c 2f6e 6574 2f69 7076 7461 7035 tual/net/ipvtap5

20: 3735 332f 7175 6575 6573 2f74 782d 3000 753/queues/tx-0.

30: 4143 5449 4f4e 3d61 6464 0044 4556 5041 ACTION=add.DEVPA

40: 5448 3d2f 6465 7669 6365 732f 7669 7274 TH=/devices/virt

50: 7561 6c2f 6e65 742f 6970 7674 6170 3537 ual/net/ipvtap57

60: 3533 2f71 7565 7565 732f 7478 2d30 0053 53/queues/tx-0.S

70: 5542 5359 5354 454d 3d71 7565 7565 7300 UBSYSTEM=queues.

80: 5345 514e 554d 3d32 3638 3332 00 SEQNUM=26832.

got kobject msg

00: 6164 6440 2f64 6576 6963 6573 2f76 6972 add@/devices/vir

10: 7475 616c 2f6e 6574 2f69 7076 7461 7035 tual/net/ipvtap5

20: 3735 332f 6970 7674 6170 2f74 6170 3300 753/ipvtap/tap3.

30: 4143 5449 4f4e 3d61 6464 0044 4556 5041 ACTION=add.DEVPA

40: 5448 3d2f 6465 7669 6365 732f 7669 7274 TH=/devices/virt

50: 7561 6c2f 6e65 742f 6970 7674 6170 3537 ual/net/ipvtap57

60: 3533 2f69 7076 7461 702f 7461 7033 0053 53/ipvtap/tap3.S

70: 5542 5359 5354 454d 3d69 7076 7461 7000 UBSYSTEM=ipvtap.

80: 4d41 4a4f 523d 3233 3400 4d49 4e4f 523d MAJOR=234.MINOR=

90: 3100 4445 564e 414d 453d 7461 7033 0053 1.DEVNAME=tap3.S

a0: 4551 4e55 4d3d 3236 3833 3300 EQNUM=26833.

It's a simple text-based protocol, with one event per datagram, and

fields delimited by a NUL byte. You can see that the second event,

which is adding the tap3 character device, includes the MAJOR (234)

and MINOR(1) variables, and the device name. This should be all I need to

call mknod(2).

To create the device I need the CAP_MKNOD capability. I can give it to the

process using setpriv(1)

$ exec doas setpriv \

--ambient-caps +mknod \

--inh-caps +mknod \

--securebits +keep_caps_locked,+no_setuid_fixup_locked \

--reuid $(id -u) --regid $(id -g) --init-groups \

env -i $(env) guix shell -D

$ setpriv --dump | grep capabilities

Inheritable capabilities: mknod

Ambient capabilities: mknod

$ dune test -w --no-buffer

Unfortunately, this doesn't work:

mknod /tmp/ipvtap5023: Operation not permitted

I suspect that the test loses the CAP_MKNOD capability when it uses unshare(1) to transition from the default namespace into its own user namespace, since if I use mknod(1) to manually create the character device outside of the namespace, it is successful.

An unforseen leak

While I was pondering what to do, I noticed another problem. One of the

core assumptions of this whole system is that I would be able to unlink

the device node from the file system, and then use open file descriptors

as references that would prevent the device from being deleted until

they were closed. With the approach I took of manually creating the

device nodes, I am able to create the nodes in directories where I have

write access, and unlink them without requiring write access to the

/dev directory.

Imagine my surprise when I found a /dev/tap3 file during my test! At

first I suspected udev was doing this somehow, but that seemed impossible;

udevd listens for device events on a NETLINK_KOBJECT_UEVENT socket,

and those should not receive events from other network namespaces.

I wrote a quick tool to simply dump everything it could read from a netlink socket to stdout, similar to the testing in previous sections. As I expected, I did not see events for the ipvtap devices unless I ran the tool within the same network namespace. The device permissions were also wrong:

$ ls -l /dev/tap3

crw------- 1 root root 234, 1 Jan 5 16:15 /dev/tap3

My udev rules would have changed the node permissions to 0660 and owner to

tapalloc. Using strace on udevd more or less proved its innoncence;

it wasn't calling mknod to create the file. After a bit more research

I realized it was devtmpfs; back in the 2000s there was a stretch of

time where devfs was removed from the kernel, and udev was responsible

for populating dev. I guess that state of affairs was too difficult,

because the concept was re-introduced in 2010 in the form of devtmpfs;

the kernel will post device nodes there for any driver that asks for it,

and its mounted by default at /dev on my system.

That throws a wrench in my plan. I'm not ready to configure my system

to run without devtmpfs. I can think of a few options:

- Run a device manager in the same namespace that removes the nodes from devtmpfs.

- Give tapallocd the privilege to remove these nodes itself.

- Patch the kernel to introduce a device analagous to /dev/net/tun, but for ipvtap devices. It would have to be a per-parent device, and new ioctls would be needed to configure the ipvlan-specific flags.

- Find some other way to track when the interface is no longer in use.

None of these options are great, but the least bad option, I think, is to rely on a device manager. So setup instructions will include steps to add udev/mdev/mdevd rules that fix the permissions of tap devices as they come online.

Because I want to keep my tests self contained, I will move the mknod(2)

calls out of tapallocd and into the test, where it will run a child

process that emulates a device manager, including the re-broadcasting

behavior that both udev and mdevd support. I use this behavior in

tapallocd as a synchronization tool, to wait until the device node

is ready. It runs as a child process of the test, forked before a new

user+network namespace is created, and looks like this:

let prefix = Bytes.of_string "add@/devices/virtual/net/" in

let rec loop () =

let n = Unix.recv uevent buf 0 len [] in

Fmt.(epr "got %a@." (hex ()) (n, Bytes.get buf));

if not (Bytes.starts_with ~prefix buf) then loop ()

else process (Bytes.sub_string buf 0 n)

and process s =

let props =

String.split_on_char '\000' s |> List.filter_map parse_kv

in

let name = List.assoc "DEVNAME" props in

let major = List.assoc "MAJOR" props |> int_of_string in

let minor = List.assoc "MINOR" props |> int_of_string in

let dev = makedev major minor in

let* () = mknodat devdir name 0o660 S_IFCHR dev in

Fmt.epr "udev: created device node %s@." name;

rebroadcast s

and rebroadcast s =

let _ = Unix.send_substring donefd s 0 (String.length s) [] in

loop ()

in

loop ()

This didn't quite work. It turns out the libmnl

library doesn't really allow you to send a multicast

message to a netlink socket, because the mnl_socket_sendto

routine does not pass an nl_groups field in the sockaddr_nl structure.

I had to use sendmsg directly and construct my own:

and rebroadcast n =

(* mnl_socket_sendto blanks out the groups field, so we

have to bypass it with our own msghdr *)

let open Ctypes in

let sa = make Sockaddr_nl.t in

Sockaddr_nl.(setf sa nl_family (int_of_family AF_NETLINK));

Sockaddr_nl.(setf sa nl_pid 0);

Sockaddr_nl.(setf sa nl_groups nl_group_id);

let iov = make Iovec.t in

Iovec.(setf iov iov_base (CArray.start buf |> to_voidp));

Iovec.(setf iov iov_len n);

let msg = make Msghdr.t in

Msghdr.(setf msg msg_iov (addr iov));

Msghdr.(setf msg msg_iovlen 1);

Msghdr.(setf msg msg_name ((addr sa) |> to_voidp));

Msghdr.(setf msg msg_namelen (sizeof Sockaddr_nl.t));

Msghdr.(setf msg msg_controllen 0);

Msghdr.(setf msg msg_flags 0);

sendmsg fdw (addr msg)

As an aside, since I re-wrote the tests from scratch many times throughout the course of this project. I eventually forgot why I did this and spent awhile re-discovering the same solution. The lesson here is that I should make more frequent check-ins to source control with commit messages and comments describing the issues I encountered.

Another issue I ran into was with sending file descriptors over a

Unix socket; I did this in a C stub because of how arcane the API was,

relying on lots of pointer casting and macros. The C stubs implement

two OCaml functions in the Sockfd module:

(** [send fds sock] sends the file descriptors in [fds] to the

process on the other end of the socket [sock]. *)

val send : Unix.file_descr array -> Unix.file_descr -> unit

(** [recv n sock] receives up to [n] file descriptors from [sock],

which must be a unix socket *)

val recv : int -> Unix.file_descr -> Unix.file_descr array

However, I was getting strange behavior. I would send two descriptors:

Sockfd.send [| fd1; fd2 |] sock;

But on the receiving side, I would only receive one:

let fds = Sockfd.recv 2 sock in

(* Array.length fds = 1 ??? *)

After some experiementation and research (strace was helpful here) the problem was that I was sending two control messages with one descriptor each, but on the receiving end, they were merged into one control message with two descriptors. I don't know if this is Linux specific behavior, so I rewrote the send function to put all of the fds into one message, and the recv function to support any arrangement of fds (up to SCM_MAX_FD) within any number of messages.

After lots more silly bugs, reading docs, reading the kernel code, I finally got to the end of the test:

tapallocd: char device tap3 for ipvtap21701 is ready

tapallocd: removing reference to device

tapallocd: opened 1 fds to tap3

tapallocd: sending fd 6

tapalloc: received fds [3]

tapalloc: moving fds [3] to [8]

exec /home/droyo/src/dev.arroyo.cc/tapalloc/_build/default/test/test_dummy.exe stage2

mac address is 12:b2:e1:ac:a9:db

got tap device with stats {dev: 66306, ino: 7262473, perm: 0640, uid: 65534,

gid: 65534, rdev: 59905}

ok

Unfortunately I still wasn't done; I found that the kernel was not destroying the network device after its device node and all references to it were gone. I thought about it some more and found that the most suitable option is the one taken by all the fashionable container managers out there; place the network interface in the client's network namespace. I did consider this initially and hesitated for a number of reasons:

-

I was concerned it would make it harder for me to implement my dhcp/slaac helper as it would need to use NETLINK_NETFILTER to snoop on packets from these interfaces, which are now in different namespaces. I think this can be addressed with the NETLINK_LISTEN_ALL_NSID option.

-

I didn't want to give the client, which probably had CAP_NET_ADMIN privileges in their namespace, control over the device if I didn't have to. Upon reflection, I was already giving them the ability to write raw ethernet frames into the device. Giving them the ability to bring the sub-interface up or down or assign addresses is not a big deal. Besides, I control the clients.

The work I did before to emulate a device manager is not wasted; obtaining file descriptors for the tap device will still be a privilege reserved for the server. It's also one less dependency for automated tests. Moving the device into the clients' namespaces is probably for the best, as it will establish some symmetry between VMs (which will use ipvtap devices) and containers (which will use ipvlan devices).

To start, I tried using the IFLA_NET_NS_PID attribute in my

RTM_NEWLINK request:

mnl_ifla_put nlh IFLA_NET_NS_PID peer_pid;

With this attribute, my test hangs here:

kernel accepted RTM_NEWLINK

waiting for device manager to create char device

If I peek into the client's namespace, the link exists:

$ doas nsenter --target $(pgrep -f tapalloc.exe) --net -- ip link show

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ipvtap8447: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN mode DEFAULT group default qlen 500

link/ether d2:09:23:6f:b7:bd brd ff:ff:ff:ff:ff:ff

So I suspect the "udev" emulator isn't getting notifications about this device because it's not in our namespace. I tried setting the NETLINK_LISTEN_ALL_NSID flag. From netlink(7):

"When set, this socket will receive netlink notifications from all network namespaces that have an nsid assigned into the network namespace where the socket has been opened. The nsid is sent to user space via an ancillary data."

Unfortunately, it did not work work; the device manager listening in tapallocd's namespace did not get any uevents. I could not tell if that was because the kernel did not consider the two namespaces to be linked (due to one ns having a sub-interface of a device in the other ns), or because the uevents are not properly tagged when being broadcast. Another downside of this approach is that in a real deployment, it would require the device manager to set the NETLINK_LISTEN_ALL_NSID socket option, which may require patching the software to support socket options. Perhaps I can fix that in the future. For now, I'll do the following:

- Bring the device up in tapallocd's namespace and obtain file descriptors

- Move the device to the client's namespace after it's ready

This two-step approach opens the possibility of leaking devices if something goes wrong between the device creation and its transfer to the client namespace. For example, the client cloud close the connection, or it could be in a namespace that the server doesn't have permission to create interfaces into. I am comfortable with this risk; I can write the server carefully to clean up the device if it can't be transferred, and have some periodic sweep for "dangling" interfaces. I can probably also work out some scheme to limit the total number of interfaces in the server's namespace at any given time.

Doing it this way, I finally get what I want; the network device is allocated, the client has open file descriptors to send and receive ethernet frames with, and the device's lifecycle is the same as the client's. I no longer need to perform any trickery with unlinking files. The proof of concept is complete!

In all honesty, this service could have been an execline script with the help of the programs in fdtools. This was really more of a warm-up; an easy, tractable problem to get me accustomed to the quirks and oddities of netlink and ctypes before getting to my real goal.

Along the way I finally understood how to use the Ctypes view function.

It's basically registering handlers to convert to and from C and OCaml

values when you pass a value to or receive a value from a C function.

As an example, the nfnetlink structures contain several fields which are

documented as holding values in network byte order (e.g. big-endian). For

example:

struct nfqnl_msg_packet_hdr {

__be32 packet_id; /* unique ID of packet in queue */

__be16 hw_protocol; /* hw protocol (network order) */

__u8 hook; /* netfilter hook */

} __attribute__ ((packed));

I adapted this struct like so:

module Nfqnl_msg_packet_hdr = struct

type t = [`Nfqnl_msg_packet_hdr] structure

let t:t typ = structure "nfqnl_msg_packet_hdr"

let packet_id = field t "packet_id" be32

let hw_protocol = field t "hw_protocol" be16

let hook = field t "hook" int

let () = seal t

end

What are be32 and be16 above? They're views over int:

let be16 = view int ~read:ntohs ~write:htons

let be32 = view int ~read:ntohl ~write:htonl

Meaning, whenever I read a field of type be32 (through getf or the

!@ operator for pointer dereferencing), I get back (ntohl value),

and whenever I write such a field, (htonl value) is written instead.

I had to write the ntoh* and hton* functions myself, although they

may be in one of the modules I'm already using. They were easy

enough:

let mirror width x =

let rec loop dst src = function

| 0 -> dst

| n ->

let lsb = src land 0xff in

let dst' = (dst lsl 8) lor lsb in

let src' = (src lsr 8) in

loop dst' src' (n-1)

in

loop 0 x width

let htons = if Sys.big_endian then Fun.id else mirror 2

let htonl = if Sys.big_endian then Fun.id else mirror 4

let ntohs = htons

let ntohl = htonl

After understanding view better I started using it in other ways, for

example for some enum-type fields that convert variants to and from

integers, but weren't usable with the TYPE.enum function for one

reason or another. For example, defining a family view for the address

family constants looks like this:

let family = view int ~write:family_to_enum

~read:(fun x -> family_of_enum x |> Option.value ~default:AF_UNSPEC)

And using it as a field in a structure allows me to do things like

let v = mnl_nlmsg_put_extra_header nlh Nfgenmsg in

Nfgenmsg.(v |-> nfgen_family) <-@ AF_UNSPEC;

Helping VMs help themselves - tapautoconf

Ipvlan (and ipvtap) devices are sub-interfaces of some other device. Sub-interfaces behave as if they're attached to a switch, and are able to communicate efficiently without the participation of the underlying device. If a packet is destined to an address that does not belong to a sub-interface, it will be transmitted by the underlying device. Similarly, when the underlying device receives a packet, if it's destined for a sub-interface, that interface receives it, otherwise, the packet is dropped.

The core challenge with using ipvlan devices is that all sub-interfaces share the ethernet (MAC) address of the underlying device. Traffic is multiplexed among the sub-interfaces based on the layer 3 (IPv4/IPv6) addresses. That means, in order to receive unicast traffic, an ipvlan device must have an IP address assigned to it. Without an address, they can only receive broadcast and multicast traffic.

I don't want to manually assign IP addresses to my VMs, and I don't want to invent my own IP address management automation. I want my VMs to acquire their own addresses from the existing automation in whatever network I happen to be in. That means supporting DHCP for IPv4 and SLAAC for IPv6. Since I also plan to use these VMs on my laptop, I also have the stipulation that I may not have control of the network, so I cannot rely on special DHCP configuration.

My strategy will be to implement a service that will "learn" the IP address(es) that an interface wants to use by snooping for DHCP and ICMPv6 traffic, and assign those addresses to the interface on its behalf. Because I need to know what interface the packet came from, simply listening for broadcast & multicast packets is not enough. I can use a feature of netfilter that allows a filtered packet to be queued to a userspace program. The program can even modify and re-inject the packet, which I will use later for an extra feature. This allows me to only intercept the packets I actually need, and netfilter will include metadata about where the packet originated, allowing me to associate a packet with the interface it came from.

Test setup

I need a test. The test is my "north star", some marker that will show

me if I'm going in the right direction. This setup will be slightly more

complicated than the tapalloc test from earlier.

Since I can't find a dhcp client that would work with tap devices

and isn't a full-fledged VM, I'll write a variant of tapallocd that

allocates ipvlan instead of ipvtap devices. I was planning to do

so anyway.

Dnsmasq acts as a stand-in for the dhcp/radvd services that run on the router in my home network, and is pre-configured with an IPv4 address pool and an IPv6 prefix which it will advertise for stateless autoconfig (no DHCPv6).

The dhclient process runs with the -n flag, which tells dhclient

not to actually configure the interface after obtaining an address. The

namespace containing the master dummy0 interface will have netfilter

rules installed that intercept ICMPv6 and DHCP traffic and enqueue it

to a netlink queue, which tapautoconf will listen on. The test will

be successful when tapautoconf is able to configure the IPv4 and IPv6

addresses that dhclient negotiates with dnsmasq.

To start, I will construct this topology from an automated test, setup

some netfilter rules to intercept traffic, and write a stand-in for

tapautoconf that just logs every packet it receives and what metadata

is available for it.

While setting up the test I hit an issue; I was attempting to create

ipvlan devices directly in the client's namespace, since the dance

required to obtain file descriptors for ipvtap devices was not necessary.

I did this by setting the IFLA_NET_NS_PID attribute to the pid of

the client in the RTM_NEWLINK message:

let (peer_pid, gid, uid) = Sockfd.peercred Unix.stdin in

...

mnl_ifla_put nlh IFLA_NET_NS_PID peer_pid

The peer pid can be retrieved with the SO_PEERCRED socket option. Despite setting this attribute, the network device was created in tapallocd's network namespace, rather than the client's. I wracked my brain over this problem for a long time. I was especially befuddled because in my prior test, before changing ipvtap to a 2-stage allocation method, I was able to create devices directly in the peer namespace. I knew this was supposed to work. I pored through the kernel source. I tried all kinds of experiments. I added more debug statements. I mechanically looked for differences between the working and non-working program, going so far as to re-arrange attributes in some desparate terror that the order would matter.

Ultimately, the issue was quite simple. The tapallocd program queries

the SO_PEERCRED socket option, which is a struct that looks like this:

struct ucred {

pid_t pid; /* process ID of the sending process */

uid_t uid; /* user ID of the sending process */

gid_t gid; /* group ID of the sending process */

};

It uses the peer's process ID to identify the network namespace to insert the network interface into. The problem was that peer_pid was not the pid of the process on the other end of the socket. How is that possible? Well.

The tapallocd programs are intended to run under a super-server

such as inetd, socat(1), or s6-ipcserver(8). The server

listens on the unix socket, and for each accepted connection, forks an

instance of tapallocd. A single tapallocd process serves a single

request.

I wanted my test to require minimal external dependencies, and when possible, I did not want it to write files to a file system, or to bind to network addresses on the system. The solution I came up with looked something like this:

let well_known_port = 42

let start () =

let fd = bg Sys.argv.(0) [| Sys.argv.(0); "listen" |]

Unix.dup2 fd well_known_port

(* runs in a separate process *)

let listen () =

let buf = Bytes.create 1 in

while Unix.(read stdin buf 0 1) > 0 do

let srv = bg "tapallocd" [| "tapallocd"; "--verbosity=debug"; "dummy0" |] in

Sockfd.send [| srv |] stdout

done

let connect () =

ignore (Unix.write_substring well_known_port " " 0 1);

let fd = Sockfd.recv 1 well_known_port).(0) in

Unix.dup2 fd (fd_of_int 6)

Unix.dup2 fd (fd_of_int 7)

...

let test () =

let c = connect () in

Unix.execvp "tapalloc" [| "tapalloc"; ... |]

let main () =

match Sys.argv.(1) with

| "listen" -> listen ()

| "test" -> test ()

...

I've ellided some details, but essentially, the test program repeatedly

exec's itself, using its first argument to choose what function to

call. A socketpair connects the file descriptor 42 to the standard input

and output of a child process that starts instances of tapallocd and

shares one end of another socketpair that is connected to tapallocd's

standard input and output. The test can then execute into the tapalloc

client, which expects the tapallocd service to be connected to file

descriptors 6 (for read) and 7 (for write). The numbers 6 and 7 come

from the UCSPI convention.

What I learned was, the SO_PEERCRED option contains the pid

(and uid, and gid) of the process which opened the socket, not

the process that happens to be writing to it. In my ipvtap tests, which

did not require a forking server since there was only one request, these

processes were one and the same. But with the "server" implementation

above, the process that creates the socket (in the implementation of the

bg function) is the process running the listen function, not the

process that calls connect. I changed the implementation to look like

this:

(* runs in background process *)

let rec listen () =

match Sockfd.recv 1 Unix.stdin with

| [| c |] -> (

(* tell client we've received the fd *)

ignore (Unix.write_substring c "." 0 1);

match Unix.fork () with

| 0 -> Unix.close c; listen ()

| _ -> (* new child process *)

Unix.(dup2 c stdin);

Unix.(dup2 c stdout);

Unix.execvp "tapallocd" [ "tapallocd"; "--verbosity=debug"; "dummy0" ])

| _ -> exit 0

(* called by test *)

let connect () =

let client, server = Unix.(socketpair PF_UNIX SOCK_STREAM 0) in

Sockfd.sendfd [| server |] well_known_port;

(* read one byte as a sign that server has received the fd *)

ignore (Unix.read client (Bytes.create 1) 0 1);

Unix.dup2 client (fd_of_int 6);

Unix.dup2 client (fd_of_int 7)

So rather than listen opening a socketpair on behalf of the process calling

connect, connect opens its own socketpair and shares one end with listen.

In hindsight, it makes perfect sense that the credentials in SO_PEERCRED would

be those of the process that opened the socket. After all, the same socket could

be dup'd to any number of processes in any number of namespaces, and treating

SO_PEERCRED as "the currently speaking user" is inherently racy.

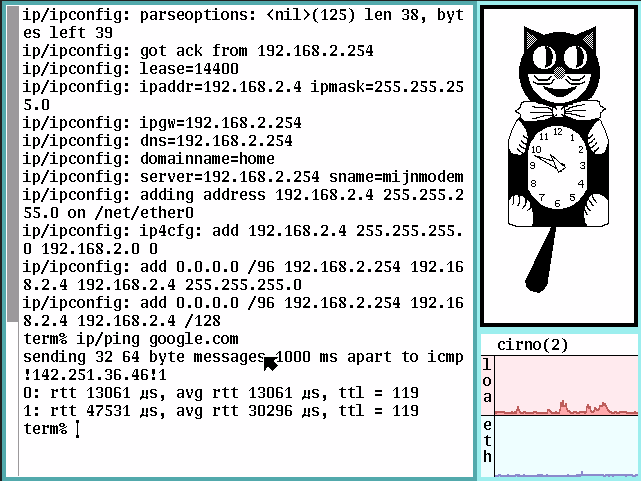

With that cleared up, the topology, at least, is in place. dclient -4

cannot obtain a lease, even though dnsmasq is offering it one:

DHCPDISCOVER on nic0 to 255.255.255.255 port 67 interval 4

Jan 10 20:11:11 dnsmasq-dhcp[27106]: DHCPDISCOVER(nic0) 0e:96:ef:9b:98:7b

Jan 10 20:11:11 dnsmasq-dhcp[27106]: DHCPOFFER(nic0) 192.168.0.119 0e:96:ef:9b:98:7b

No DHCPOFFERS received.

Unable to obtain a lease on first try. Exiting.

A packet capture makes things obvious:

62:9c:ad:b6:86:f2 > ff:ff:ff:ff:ff:ff: 0.0.0.0.68 > 255.255.255.255.67: BOOTP/DHCP, Request from 62:9c:ad:b6:86:f2

62:9c:ad:b6:86:f2 > 62:9c:ad:b6:86:f2: 192.168.0.1.67 > 192.168.0.121.68: BOOTP/DHCP, Reply, length 300

The DHCPDISCOVER request is broadcast, but the DHCPOFFER reply is unicast, addressed at the L2 layer to the mac address of the parent interface, and at the L3 layer to the IPv4 address being offered. Since the client does not have an L3 address configured, as an ipvlan device it cannot receive the reply. It's a chicken and egg problem. The strategy for making DHCP work, then, is to intercept these DHCPOFFER packets, configure the address they offer on the interface, then re-inject them. While netfilter would allow us to identify the interface that the DHCPDISCOVER offer originated from, it can't tell us what interface the DHCPOFFER was intended for. The tapautoconf program will have to match some information in the DHCPDISCOVER packet, such as the DUID, to information in the DHCPOFFER packet.

Let's insert tapautoconf into the mix. It is inspired by examples

in the libmnl and libnetfilter_queue C libraries. At this point, it

simply binds to a netfilter queue, and prints out every message it

receives. This is the main loop, so to speak:

let logmsg nlh =

Logs.info (fun m -> m "%a" pp_nlmsg nlh);

in

let rec loop () =

match Event.(sync (receive firehose)) with

| Ok q -> Queue.iter logmsg q; loop ()

| Error _ as err -> err

in

I have 3 threads set up; one for reading messages from netlink,

one for writing messages to netlink, and one for processing. They

communicate via Event channels

from OCaml's threading library. I really enjoyed setting up the event

loop. I think in any language or scenario, event loops are incredibly

fun to write, as you have to think about how to write it in a way

that keeps the system responsive to new events, while also avoiding

unbounded resource usage. The Event library is quite nice; it gives

you channels with send and receive operations, and a select

function that lets you choose between them:

val send : 'a channel -> 'a -> unit event

val receive : 'a channel -> 'a event

val select : 'a event list -> 'a

let event =

select [

receive channel1;

receive channel2;

receive channel3;

]

in

...

That may seem fairly limiting initially, as it only allows you to

select between channels that produce values of the same type. Luckily,

there is also the wrap function that can convert an event into another

type without waiting for the event to occur.

val wrap : 'a event -> ('a -> 'b) -> 'b event

So, I have a type that describes the different kinds of events that can occur:

type request = (Nlmsghdr.t * nlmsg_reply Event.channel)

type ioevent =

| Request of request (** request from another thread *)

| Response of nlmsg_reply (** response from netlink *)

| Delivered of mailbox (** a thread received its reply *)

| Flush (** messages were flushed to netlink *)

And helper functions convert send and receive actions into

this iovent type:

let ioevent_of_req r = Request r

let ioevent_of_rsp r = Response r

let ioevent_of_nop _ = Flush

let ioevent_of_ack m () = Delivered m

let recv_rsp ch = Event.(wrap (receive ch) ioevent_of_rsp)

let recv_req ch = Event.(wrap (receive ch) ioevent_of_req)

let flush_req wq ch = Event.(wrap (send ch (Queue.copy wq)) ioevent_of_nop)

let deliver m = Event.(wrap (send m.ch (Ok (Queue.copy m.q))) (ioevent_of_ack m))

So that my event loop looks like this:

let events =

[ recv_rsp from_netlink ]

|> (if not full then List.cons (recv_req request) else Fun.id)