As you have seen in other posts, I have been experimenting with ipvlan devices in l2 mode as a general way to multiplex the network interfaces on my Linux systems. They provide almost direct access to the networking hardware: there is no bridge to set up, no routes, less copying of data, and fewer moving parts. And they work in scenarios where the similarly-advantaged macvlan devices do not (like wifi).

The big caveat with ipvlan devices is that they all use the ethernet address of the parent device they are attached to. This property breaks most implementations of DHCP, DHCPv6, and Stateless Address Auto-configuration (SLAAC), because they all tend to use the device's L2 address to derive an L3 address. I am working on clearing that obstacle in a way that would work for most home networks, but that is separate from the topic of this post.
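To make the collision concrete, here is a minimal sketch of the classic modified EUI-64 derivation that SLAAC implementations have traditionally used for link-local addresses. The function name is my own, and many modern systems use other schemes (such as stable privacy addresses), so treat this as an illustration of the problem rather than a description of any particular stack:

```python
import ipaddress


def eui64_link_local(mac: str) -> ipaddress.IPv6Address:
    """Derive an fe80::/64 address from a 48-bit MAC using modified EUI-64."""
    octets = bytearray.fromhex(mac.replace(":", ""))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address")
    octets[0] ^= 0x02  # flip the universal/local bit
    # Interface identifier: first three octets, ff:fe, last three octets.
    iid = bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])
    prefix = bytes.fromhex("fe80") + bytes(6)  # fe80::/64
    return ipaddress.IPv6Address(prefix + iid)


# Every ipvlan device on the same parent reports the same MAC, so each
# one would derive this same address and collide with its siblings.
print(eui64_link_local("62:d8:e2:c3:7d:94"))
```

Since the input is the only thing that varies, two devices sharing a MAC cannot end up with different addresses under this scheme.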

It occurred to me that even if I were to set up the infrastructure to get DHCP and SLAAC working, a 9front VM might take issue with seeing its L2 address in use by other endpoints on the network. So I decided to take a break from that work and do some testing on a 9front VM to make sure it wasn't all in vain.

Bootstrapping a development environment

I need a working 9front VM to make and test any necessary changes. I set up the following topology (graph generated by plotnetcfg):

On my systems, I put all of the physical interfaces in the "phy" network namespace. These interfaces are never used directly; instead, workloads which need network access, such as my login session, VMs, or other network services, will derive a macvlan or ipvlan interface from them. I do not have any routing set up; interfaces with different parent devices cannot communicate with each other.

I plan to make all of my derived interfaces ipvlan/ipvtap devices in the future, but macvlan is useful for now because it "just" works with DHCP and SLAAC configurations that assume a 1:1 relationship between L2 and L3 addresses.

The qemu process is a 9front VM that starts on boot, as a system service. Here is its run script:

#!/usr/bin/env -S execlineb -P

unshare --net

ontap -t macvtap --name=nic0 /run/ontap/port.eth0 --

importas NIC0_MAC ONTAP_HWADDR

importas NIC0_FD ONTAP_FD0

ontap -t ipvtap -n nic1 /run/ontap/port.dummy0 --

importas NIC1_MAC ONTAP_HWADDR

importas NIC1_FD ONTAP_FD0

if { ip addr add 10.9.0.9/32 dev nic1 }

if { ip link set nic1 up }

if { ip link set nic0 up }

s6-setuidgid glenda

qemu-system-x86_64 -m 2048 -smp 2 -nographic

-net nic,model=virtio,macaddr=${NIC0_MAC}

-net tap,id=net0,fd=${NIC0_FD}

-net nic,model=virtio,macaddr=${NIC1_MAC}

-net tap,id=net1,fd=${NIC1_FD}

-kernel /storage/glenda/9pc64

-drive file=/storage/glenda/9front.qcow2.img

-initrd /storage/glenda/plan9.ini

I wrote a service, dubbed "ontap" (name TBD as it clashes with netapp's OS), which allocates ipvlan/macvlan devices on behalf of processes connecting to it, and acquires and passes open file descriptors for their character devices (in the case of tap devices) over the socket as ancillary data (see cmsg(3)). This lets me separate the provisioning and usage of network interfaces.
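For readers unfamiliar with descriptor passing, the mechanism can be sketched in a few lines. This is not ontap's code; it is a self-contained demo that assumes Python 3.9+ on a Unix system, and it uses a pipe as a stand-in for the tap character device:

```python
import os
import socket


def pass_fd_demo() -> bytes:
    """Send one end of a pipe across a Unix socket pair and use the received copy."""
    server, client = socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM)
    read_end, write_end = os.pipe()
    try:
        # "Service" side: hand the descriptor over as SCM_RIGHTS ancillary data.
        socket.send_fds(server, [b"fd0"], [write_end])
        # "Client" side: receive the message plus a duplicate of the descriptor.
        msg, fds, _flags, _addr = socket.recv_fds(client, 16, 1)
        received = fds[0]
        os.write(received, b"hello via " + msg)
        os.close(received)
        os.close(write_end)
        return os.read(read_end, 64)
    finally:
        os.close(read_end)
        server.close()
        client.close()


print(pass_fd_demo())
```

The receiving process ends up with its own descriptor referring to the same open file, which is why the service can do the privileged provisioning while the client only ever sees an already-open file descriptor.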

The 9front kernel supports multiboot, allowing it to boot from a standalone kernel that I've extracted from a 9front installation. The -initrd argument is actually just a plan9.ini(8) file:

nobootprompt=local!/dev/sdC0/fs -A

console=0

user=glenda

nvram=#S/sdC0/nvram

sysname=cirno

auth=cirno

service=cpu

mouseport=ps2

monitor=vesa

vgasize=1920x1080x16

tiltscreen=none

The disk image is a standard 9front installation that is configured to run as a standalone CPU + Auth + file server, which I configured by following section 7 of the FQA. In the future, my VMs will be diskless, and boot from a shared file system. I also have not decided on how I want to populate DNS records for the addresses it acquires on startup, so I modified /rc/bin/cpurc.local to print the contents of /net/ndb to the console, and I check the logs for the VM's IP address.

The console=0 line allows the console to be written to a log file, when using qemu's -nographic flag.

With that in place, I can connect to the VM using drawterm:

NAMESPACE= drawterm -a 192.168.88.109 -h 192.168.88.109 -u glenda &

I have to unset the NAMESPACE environment variable to prevent drawterm from attempting to talk to plan9port's factotum(4), which does not support the dp9ik authentication protocol.

Now I have a usable development environment:

The ipvtap device that I want to test is the second network interface attached to my VM. By default, it's not set up; I can add it to the existing network stack at /net like so:

cpu% bind -a '#l1' /net

Then I should see a /net/ether1 directory with various files (see ether(3)). As a sanity check, I can compare the ethernet address:

cpu% cat /net/ether1/addr

62d8e2c37d94

with the output of ip link on the host:

$ doas nsenter -t $(pgrep -n qemu) --net ip link show nic1

8: nic1@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN mode DEFAULT group default qlen 500

link/ether 62:d8:e2:c3:7d:94 brd ff:ff:ff:ff:ff:ff
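The two tools print the same address in different notations (bare hex on 9front, colon-separated on Linux). If you want to script this sanity check, a small helper along these lines would do; the function name is hypothetical:

```python
import string


def normalize_mac(text: str) -> str:
    """Reduce a MAC address in either notation to 12 lowercase hex digits."""
    digits = text.strip().lower().replace(":", "").replace("-", "")
    if len(digits) != 12 or any(c not in string.hexdigits for c in digits):
        raise ValueError(f"not a 48-bit MAC address: {text!r}")
    return digits


guest = "62d8e2c37d94"        # from: cat /net/ether1/addr
host = "62:d8:e2:c3:7d:94"    # from: ip link show nic1
print(normalize_mac(guest) == normalize_mac(host))
```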

With that confirmed, I can configure an address (note: the same address is configured on the interface on the host side):

cpu% ip/ipconfig ether /net/ether1 10.9.0.9 255.255.255.0

And try to ping it from the host0 interface:

# ip addr add 10.9.0.100/24 dev host0

# ping 10.9.0.9

PING 10.9.0.9 (10.9.0.9): 56 data bytes

92 bytes from 10.9.0.100: Destination Host Unreachable

Ok, well it's too much to expect it to work the first time! While the ping was running, the following error was printed to the console:

arpreq: 10.9.0.100 also has ether addr 62d8e2c37d94

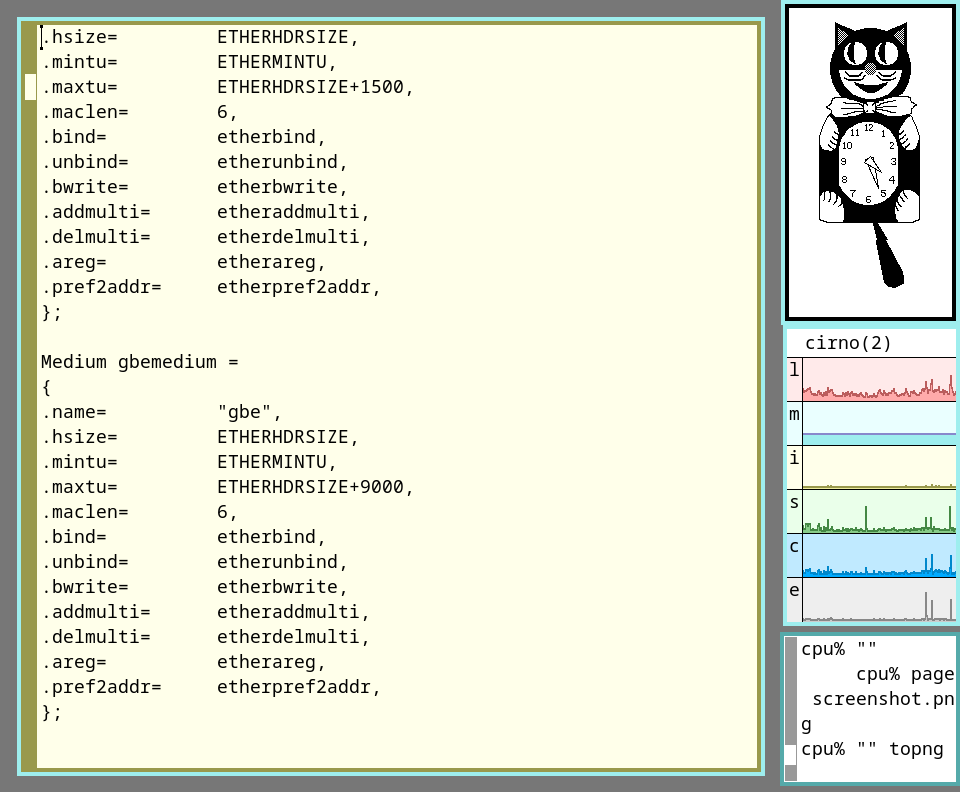

Searching for the error message brings us to recvarp in /sys/src/9/ip/ethermedium.c:

static void

recvarp(Ipifc *ifc)

{

/* ... snip ... */

switch(nhgets(e->op)) {

/* ... snip ... */

case ARPREQUEST:

/* check for machine using my ip or ether address */

if(arpforme(er->f, V4, e->spa, e->tpa, ifc)){

/* ... snip ... */

} else {

if(memcmp(e->sha, ifc->mac, sizeof(e->sha)) == 0){

print("arpreq: %V also has ether addr %E\n",

e->spa, e->sha);

break;

}

}

This check looks like it would prevent an ARP reply from going out, so removing it will be my first modification! After deleting the else branch, I can rebuild the kernel:

cpu% cd /sys/src/9/pc64

cpu% mk

cpu% cp 9pc64 /mnt/term/storage/glenda/9pc64

Since I copied the new kernel over the old one, I can just fshalt to shut down the VM and let my service manager start up a new incarnation of it. It's probably prudent to make a backup of the old kernel before booting into its replacement, but we're living on the edge!

The error message is gone, but unfortunately, the ping still doesn't work. I can run a packet capture from the 9front VM to see if it's receiving pings:

cpu% snoopy -f 'icmp' /net/ether1

after optimize: ether(ip(icmp))

...

Crickets. The "destination host unreachable" error suggests the Linux system was unable to learn the ethernet address that is using the IP 10.9.0.9. What if we look for ARP packets?

cpu% snoopy -f arp /net/ether1

after optimize: ether(arp)

008786 ms

ether(s=62d8e2c37d94 d=ffffffffffff pr=0806 ln=60)

arp(op=1 len=4/6 spa=10.9.0.100 sha=62d8e2c37d94 tpa=10.9.0.9 tha=000000000000)

011977 ms

ether(s=62d8e2c37d94 d=62d8e2c37d94 pr=0806 ln=60)

arp(op=2 len=4/6 spa=10.9.0.9 sha=62d8e2c37d94 tpa=10.9.0.100 tha=62d8e2c37d94)

So we see an ARP request (op=1) from the Linux side asking for the address of 10.9.0.9, and an ARP reply (op=2) telling it the L2 address. That seems to be working. But if we do the same capture at the host level, from qemu's network namespace:

$ doas nsenter -t $(pgrep -n qemu) --net tcpdump -n -i nic1 arp

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on nic1, link-type EN10MB (Ethernet), snapshot length 262144 bytes

22:43:25.945871 ARP, Request who-has 10.9.0.9 tell 10.9.0.100, length 28

22:43:26.951406 ARP, Request who-has 10.9.0.9 tell 10.9.0.100, length 28

22:43:27.971234 ARP, Request who-has 10.9.0.9 tell 10.9.0.100, length 28

22:43:29.950830 ARP, Request who-has 10.9.0.9 tell 10.9.0.100, length 28

We don't see the ARP reply. So it's getting lost somewhere between snoopy's capture point (in the guest) and tcpdump's capture point (in the host). To understand where snoopy's capture point is, we can review its source, in /sys/src/cmd/ip/snoopy. In main.c we can see where the file is opened:

if((!tiflag) && strstr(file, "ether")){

if(root == nil)

root = &ether;

snprint(buf, Blen, "%s!-1", file);

fd = dial(buf, 0, 0, &cfd);

So snoopy, with these arguments, essentially executes dial("/net/ether1!-1") which, according to ether(3), creates an ethernet "connection" (it's not a connection in the tcp/ip sense, just a sink that incoming packets are copied to, and a place to write outgoing packets) of type -1, which matches all packets. But if the packet is delivered to this "connection", does that mean it's also been transmitted to the underlying (virtio) device? The man page points us to /sys/src/9/port/devether.c. The "entry point" of this file is the etherdevtab:

Dev etherdevtab = {

'l',

"ether",

etherreset,

devinit,

ethershutdown,

etherattach,

etherwalk,

etherstat,

etheropen,

ethercreate,

etherclose,

etherread,

etherbread,

etherwrite,

etherbwrite,

devremove,

etherwstat,

};

If we grep a bit:

cpu% cd /sys/src

cpu% g '^struct Dev($| *{)'

port/portdat.h:220: struct Dev

We can see this is a standardized structure for devices. The two write functions, etherwrite and etherbwrite, correspond to the write and bwrite structure members, respectively. So if we read through the code with the knowledge that the source and destination ethernet addresses are the same, we can come up with the following sequence of calls for an ARP reply:

/sys/src/9/ip/ethermedium.c:/^recvarpproc\(/,/^}/ (long-running process)

for(;;)

recvarp(ifc);

↳ /sys/src/9/ip/ethermedium.c:/^recvarp\(/,/^}/

devtab[er->achan->type]->bwrite(er->achan, rbp, 0);

↳ /sys/src/9/port/devether.c:/^etherbwrite\(/,/^}/

etheroq(ether, bp, &ether->f[NETID(chan->qid.path)]);

↳ /sys/src/9/port/devether.c:/^etheroq\(/,/^}/

bp = ethermux(ether, bp, from);

if(bp == nil)

return;

/* ... */

qbwrite(ether->oq, bp);

if(ether->transmit != nil)

ether->transmit(ether);

↳ /sys/src/9/port/devether.c:/^ethermux\(/,/^}/

if(!(multi = pkt->d[0] & 1)){

tome = memcmp(pkt->d, ether->ea, Eaddrlen) == 0;

/* ... */

dispose = tome || from == nil || port > 0;

/* ... */

if(dispose){

Drop: freeb(bp);

return nil;

}

Outgoing packets are transmitted to the network device in the call to ether->transmit in etheroq (which I read as ethernet output queue), but they are gated by the ethermux function, which drops packets which have the same destination address as the ethernet device, after delivering them to any open connections (such as the one held by snoopy(8) during a packet capture).
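The gating can be modelled outside the kernel to see why the ARP reply never leaves the guest. The sketch below is a simplified Python model of only the `dispose` expression quoted above, for the unicast case; the function name and the `ignore_tome` flag are my own inventions for illustration, and `port` is passed through exactly as it appears in the snippet:

```python
from typing import Optional


def should_dispose(dst: bytes, own: bytes, from_conn: Optional[object],
                   port: int, ignore_tome: bool = False) -> bool:
    """Model of the unicast `dispose` decision quoted from ethermux."""
    tome = dst == own  # destination matches the interface's own address
    if ignore_tome:
        # Hypothetical variant: stop treating "addressed to me" as a
        # reason to drop a packet on its way out.
        return from_conn is None or port > 0
    return tome or from_conn is None or port > 0


mac = bytes.fromhex("62d8e2c37d94")
arp_conn = object()  # stands in for the connection the reply was written to

# With ipvlan, the host and the guest share one MAC, so the reply's
# destination equals the guest's own address and the packet is dropped.
print(should_dispose(mac, mac, arp_conn, 0))
print(should_dispose(mac, mac, arp_conn, 0, ignore_tome=True))
```

The first call models the stock behaviour (drop), the second models what happens if the `tome` term no longer participates in the decision.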

So we've arrived at my second modification:

- dispose = tome || from == nil || port > 0;

+ dispose = from == nil || port > 0;

It's a naive change, but the 9front kernel builds so fast, it is easier to make the change and test just to see if I'm on the right track. We've got a ping!

$ ping -n 10.9.0.9

PING 10.9.0.9 (10.9.0.9): 56 data bytes

64 bytes from 10.9.0.9: icmp_seq=0 ttl=255 time=4.679 ms

64 bytes from 10.9.0.9: icmp_seq=1 ttl=255 time=1.676 ms

64 bytes from 10.9.0.9: icmp_seq=2 ttl=255 time=1.875 ms

64 bytes from 10.9.0.9: icmp_seq=3 ttl=255 time=2.145 ms

64 bytes from 10.9.0.9: icmp_seq=4 ttl=255 time=1.719 ms

$ ip neighbor show

10.9.0.9 dev nic0 lladdr 62:d8:e2:c3:7d:94 REACHABLE

I can grab a diff of the changes I've made like so:

cpu% bind -ac /dist/9front /

cpu% git/diff /sys/src/9

I thought about whether allowing these packets to be transmitted to the underlying hardware could cause a problem like a loop of some kind. In other network contexts, I am used to calling the act of submitting a packet that should be delivered back to oneself "hairpinning", so I did some brief research on whether it was supported on real switches, frowned upon, what problems could arise, etc. I couldn't find much; it's a supported feature on many Cisco and Juniper switches, as well as Linux's virtual bridge switch, but in most cases it is disabled by default.

I couldn't think of any negative side effects from allowing this, but I'm not sure how it should interact with bridge(3) devices and their bypass flag, so I started a thread on the 9front mailing list about it. Once patches started getting shared, I figured it was time to start organizing them into git branches. First I checked my own proof-of-concept patch into a separate branch, ipvlan:

cpu% bind -ac /dist/9front /

cpu% git/branch -n ipvlan

cpu% git/commit /sys/src/9 ^ (/ip/ethermedium.c /port/devether.c)

Then I applied the patch shared on the thread on top:

cpu% hget https://felloff.net/usr/cinap_lenrek/loopback.diff | patch -p1

sys/src/9/ip/ip.c

sys/src/9/ip/ip.h

sys/src/9/ip/ipifc.c

sys/src/9/ip/ipv6.c

cpu% git/commit `{git/walk -c -f M /sys/src/9}

This patch doesn't replace mine, but restores the quality that "loopback" traffic, e.g. traffic which is destined to an L3 address on the same system (more precisely, the same IP stack) it was generated on, is not transmitted to the underlying ethernet device. I've uploaded these patches to sourcehut and will continue to test them.

update: an improved version of these changes has been committed to 9front.

Getting an IP address

I am working on a system service that will intercept and modify DHCP, DHCPv6, and NDP packets in order to make IP auto-configuration work seamlessly for ipvtap and ipvlan devices. However, it's not quite done yet. If I restrict myself to just IPv6 support for now, the Linux kernel provides me with everything I need; it will use its knowledge of ipvlan devices to derive a unique link-local address for each device, and that will also be used to configure a global address when router advertisements are received. If I don't worry about those IPs ever changing, I only need a way to tell the VM what its IP is at boot.

Here was my initial attempt at an execline script that populates the IP addresses as environment variables set in plan9.ini through the initrd argument to qemu:

#!/usr/bin/env -S execlineb -P

unshare --net --uts --mount --user --map-current-user --keep-caps

ontap -t ipvtap --name=nic0 /run/ontap/port.eth0 --

if { ip link set nic0 up }

importas -ui -S ONTAP_HWADDR

importas -ui -S ONTAP_FD0

backtick -E laddr { ontap-waitif --addr-in-net=fe80::/10 nic0 }

backtick -E raddr { ontap-waitif --addr-in-net=fc00::/7,2000::/3 nic0 }

backtick -E gw { ontap-waitif --has-route=::/0 nic0 }

fdreserve 1

importas -S FD0

fdmove $FD0 0

heredoc 0 "nobootprompt=local!/dev/sdC0/fs -A

user=glenda

sysname=cirno

laddr=${laddr}

raddr=${raddr}

gateway=${gw}

"

# The initrd needs to persist because qemu tries to detect its

# size.

backtick -E tmpfile { mktemp initrd.XXXXXX }

seekablepipe $tmpfile

fdswap $FD0 0

qemu-system-x86_64 -m 2048 -smp 2 -nographic

-net nic,model=virtio,macaddr=${ONTAP_HWADDR}

-net tap,id=net0,fd=${ONTAP_FD0}

-drive file=/storage/glenda/9front.qcow2.img

-kernel /storage/glenda/9pc64

-initrd /dev/fd/${FD0}

The ontap-waitif command above is something I wrote. It will wait for some criteria to be met, like an address or route being present, then it will print whatever matched those criteria to standard output. If I were booting the VM from a file server I could have put the IP configuration directly into the nobootprompt= parameter, but since I'm still booting from disk for now, the laddr, raddr, and gateway variables are added to the boot scripts' environments. Within the VM image, I created /rc/bin/cpurc.local which runs during the boot process (see boot(8)):

#!/bin/rc

if (! ~ $#laddr 0) { ip/ipconfig -g $gateway ether /net/ether0 $laddr /64 }

if (! ~ $#raddr 0) { ip/ipconfig -g $gateway ether /net/ether0 $raddr /64 }

When I tried this I found that my run script was stuck at this line:

backtick -E raddr { ontap-waitif --addr-in-net=fc00::/7,2000::/3 nic0 }

This should wait for the kernel to configure an address in either the global (2000::/3) or unique local (fc00::/7) address range, then print that address to be substituted for the $raddr variable later in the script.
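The matching half of that criterion is easy to sketch. The following is not the ontap-waitif implementation, only a hypothetical helper showing how a comma-separated prefix list like the one above could be checked against an interface's addresses; the waiting itself (watching for address changes) is left out:

```python
import ipaddress
from typing import Iterable, Optional


def first_addr_in_nets(addrs: Iterable[str], nets: str) -> Optional[str]:
    """Return the first address that falls inside any of the given prefixes."""
    networks = [ipaddress.ip_network(n.strip()) for n in nets.split(",")]
    for addr in addrs:
        ip = ipaddress.ip_address(addr)
        if any(ip in net for net in networks):
            return addr
    return None  # nothing matched yet; a real tool would keep waiting


addrs = ["fe80::60d8:e2ff:fec3:7d94"]  # only a link-local address so far
print(first_addr_in_nets(addrs, "fe80::/10"))
print(first_addr_in_nets(addrs, "fc00::/7,2000::/3"))
```

With only a link-local address present, the second lookup finds nothing, which corresponds to the situation where the script blocks.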

After some more experimentation, I found that once the character device for the tap interface is opened, neither the kernel nor any userspace processes using the sockets API will receive any packets; the only way to receive packets is by reading from those file descriptors. I considered changing the way the ontap service worked, so that the client could delay the opening of the character device until an address was configured. I also considered finding or writing an NDP client that used the tap device.

In the end I decided to change the operation of the ontap service so that the creation of the network interface and the opening of the character device are separate operations, controlled by the client. I thought this would be the most flexible option. Here is the script I'm using (for now).

Testing performance

While it wasn't a major factor for me, it did appeal to me that, at least in theory, the performance should be better than a tap+bridge or tap+route combo. Let's test that assumption.

Traffic between two ipvlan devices attached to the same parent device is not transmitted to the parent device; instead, it is immediately received by the target device. Its throughput should be more of a measurement of the TCP implementation(s) than anything else.

First, I'll test throughput from the Linux host to a 9front VM running on that host. From the Linux host, I'll start a process that just spews a stream of 0s to whoever connects to it:

$ s6-tcpserver :: 9999 cat /dev/zero

And from 9front, I can read it:

cpu% aux/dial tcp!2603:7000:9200:8c00:54a2:57b9:346b:487a!9999 | tput

... wait 60s for the rate to settle down ...

7.94 MB/s

Not what I was expecting! This post has grown long enough, so you can read about the performance troubleshooting in part 2.
